AI (Artificial Intelligence) today is a Russian nesting doll set of technologies that drive how AI is used, modified and self-generated. Yes, AI tools are used to refine themselves. The remarkable coding feat is that AI tools are actively run in loops to help generate better AI tools, a trend driven by transformer-based generative models, better known as Generative AI. The key tool in AI technology is the LLM (Large Language Model), which is trained on huge datasets of billions of records to produce models with billions, and in some cases trillions, of parameters.

Note what is happening in AI coding:

1) AI datasets are huge, starting at 100K records and quickly reaching trillions of tokens (words and word fragments);

2)

So Artificial Intelligence is exactly that: it is built on databases of billions of carefully curated records, which are used to train models with billions to trillions of parameters (learned numeric weights), all driven by one carefully crafted new design, the transformer architecture. Before you get lost in the numbers, here are some must-see presentations that succinctly summarize the history and rapid change Artificial Intelligence is bringing not just to computing but to modern living in general:

The remarkable fact about Artificial Intelligence is that for most of its 70 years of existence, from the WWII computing marvels until 2002, AI research was almost a backwater in computing. Yet with two breakthrough innovations, in 2005 [the use of training data in new tabular layouts] and in 2012 [the use of Nvidia GPUs backed by huge multi-million-dollar investments], AI prospects took off spectacularly, reversing the trends of the early AI Winters. Now AI's growth is projected to dwarf both the computer and Internet markets in its swift rise and impact on world economies, as shown in the Jan 2024 Royal Institute Turing Lecture:

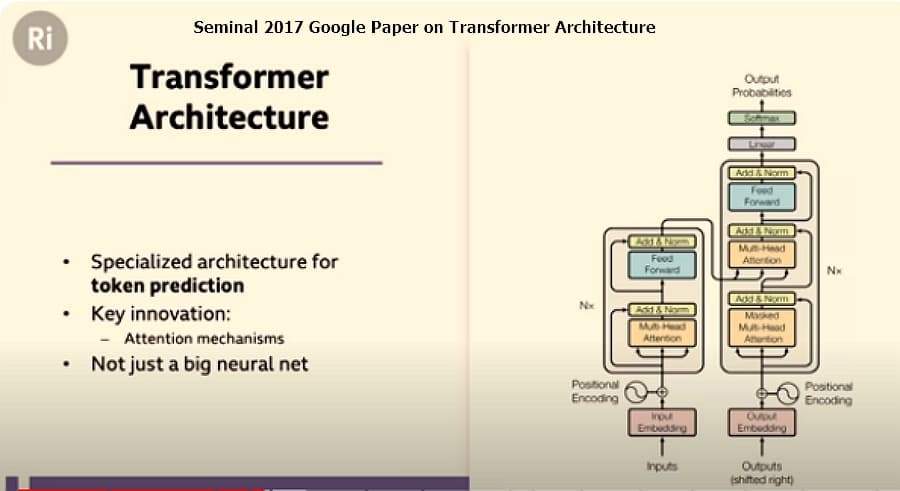

Prior to 2002, AI was better known as Machine Learning and was confined to specialized classification tasks used by governments and the military. But in 2005 came a 3-5x improvement in speed, along with lower costs for these tasks, as special software helped classify massive amounts of tabular data. This was followed in 2012 by a 166x processing-speed boost from Nvidia GPUs. Then in 2017, Google researchers introduced the transformer architecture in the paper "Attention Is All You Need", which laid the foundation for LLM technology.
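The core operation of the transformer is scaled dot-product attention, defined in "Attention Is All You Need" as softmax(QKᵀ/√d_k)V: each token scores every other token, and those scores decide how much of each token's information it blends in. The sketch below is a minimal pure-Python illustration of that one formula only. It assumes tiny hand-made vectors and simplifies by using the token vectors directly as queries, keys and values; real models pass tokens through learned projection matrices, use many attention heads and run on GPUs.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each query scores every key, the scores become weights via softmax,
    and the output is the weighted average of the value vectors.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


if __name__ == "__main__":
    # Three toy "tokens", each a 2-dimensional vector (hypothetical values)
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    # Self-attention: every token attends to every token, including itself
    for row in attention(tokens, tokens, tokens):
        print([round(x, 3) for x in row])
```

A transformer stacks dozens of layers built around this step, which is why the parameter counts of the models below grow so quickly.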

Transformer models spawned an array of new LLMs with new capabilities:

- GPT-3 and GPT-4 from OpenAI. GPT-3 has 175 billion parameters; OpenAI has not disclosed GPT-4's parameter count, so figures such as 1.5 trillion are unofficial estimates. They cover over 95 natural languages and 12 code languages.

- LLaMA from Meta, released in sizes up to 65 billion parameters (70 billion for Llama 2), covering 50 natural languages and 14 code languages.

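- PaLM 2 from Google, whose parameter count Google has not officially disclosed (its technical report describes the largest PaLM 2 model as significantly smaller than the original 540-billion-parameter PaLM), covering 101 natural languages and 12 code languages.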

- BERT from Google, with 340 million parameters (BERT-Large), covering 104 languages in its multilingual model.

- BLOOM from BigScience, with 176 billion parameters, covering 46 natural languages and 13 code languages.
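To make these parameter counts more concrete, here is a rough back-of-the-envelope sketch. It assumes the common approximation of about 12·L·d² weights for a transformer with L layers and model width d (roughly 4·d² for attention plus 8·d² for a feed-forward layer four times as wide), plus a token-embedding table, and it ignores biases, layer norms and position embeddings. The layer counts, widths and vocabulary sizes are the published configurations of GPT-3, BLOOM and BERT-Large; the helper names `approx_params` and `weights_gigabytes` are hypothetical, and the 2-bytes-per-parameter storage assumes 16-bit floats.

```python
def approx_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough transformer parameter count (hypothetical helper).

    Each layer has ~4*d^2 attention weights (Q, K, V and output projections)
    and ~8*d^2 feed-forward weights (d -> 4d -> d), i.e. ~12*d^2 in total.
    Biases, layer norms and position embeddings are ignored.
    """
    per_layer = 12 * d_model * d_model
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


def weights_gigabytes(n_params: int, bytes_per_param: int = 2) -> float:
    """Size of the raw weights, assuming 16-bit (2-byte) floats by default."""
    return n_params * bytes_per_param / 1e9


# Published configurations: (layers, model width, vocabulary size)
MODELS = {
    "GPT-3": (96, 12288, 50257),
    "BLOOM": (70, 14336, 250880),
    "BERT-Large": (24, 1024, 30522),
}

if __name__ == "__main__":
    for name, (layers, width, vocab) in MODELS.items():
        n = approx_params(layers, width, vocab)
        print(f"{name}: ~{n / 1e9:.2f}B parameters, ~{weights_gigabytes(n):.1f} GB at 16-bit")
```

Run as a script, it estimates about 174.6 billion parameters for GPT-3 and 176.2 billion for BLOOM, within about 1% of the published 175 and 176 billion figures, and roughly 350 GB of raw weights for each at 16 bits. That file size, rather than any count of "records", is what makes these models so expensive to store and serve.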

LLMs like these power a broad range of consumer and business apps from Facebook, Google, Baidu and Microsoft. But the most vigorous innovations

2) Large Language Models and The End of Programming: Harvard Lecture by Matt Welsh

3) Outrageous State of the Art of AI Directions: NVIDIA CEO Jensen Huang Leaves Everyone SPEECHLESS

First is the complete, data-generating nature of the latest generation of LLMs, as shown in this Wikipedia report, which has some of the most current information on what's moving [and with great pace] in LLMs. However, though WordPress leads the way with innovative page builders, there are 6 massive $3B tools which use LLMs in state-of-the-art AI development. But first, let's look at the top 6